🧮 The Mayo Clinic's new AI supercomputers

Cerebras Systems, purpose-built chips, and the incentives around AI infrastructure

On Tuesday, the Mayo Clinic announced it would use computers, infrastructure, and technology from Cerebras Systems to “develop AI models for the healthcare industry.”

Since I’m still at the beginning of learning how AI will affect healthcare, this sent me down a rabbit hole of questions: who exactly is Cerebras Systems, why does this matter, and what do they really mean by “develop AI models”?

All of this was to try to answer a larger question I’ve had since the deluge of AI-related healthcare tools began sometime last year: how much does the tech itself really matter, and will big health systems and companies be able to leverage it just as easily as smaller ones?

But first: who is Cerebras Systems?

They’re a chip maker, like Nvidia. But while Nvidia spent decades making chips for computers and later adapted them to handle AI-related tasks, Cerebras is a newer company that set out to build AI chips from scratch.

In other words, their chips are purpose-built specifically for AI.

Why does that matter? Because AI requires a very specific kind of computing power: the ability to perform a huge number of (relatively) simple tasks, all in parallel. That’s different from a chip designed to do one really complicated task really fast (think of a crazy hard math problem that needs to be solved with a huge degree of precision). Training and running a large language model (LLM) is the opposite: you need to do billions of smaller problems, all at the same time.[1]
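
To make the contrast concrete, here is a minimal Python sketch of the kind of arithmetic a neural network does, assuming a single toy layer whose weights and inputs are made up for illustration. Each output is a small multiply-and-add problem (a dot product) that doesn’t depend on any other output, which is exactly the kind of work that can be spread across many simple processors at once.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, inputs):
    """One small task: multiply pairs of numbers and add up the results."""
    return sum(w * x for w, x in zip(row, inputs))


def layer_outputs(weights, inputs):
    """Compute every output of a toy neural-network layer.

    Each output uses only its own row of weights, so the dot products are
    independent and could all run at the same time. Hardware built for AI
    runs thousands of these simultaneously; Python threads only illustrate
    the split here and won't meaningfully speed it up.
    """
    with ThreadPoolExecutor() as pool:
        # pool.map returns results in the same order as the rows.
        return list(pool.map(lambda row: dot(row, inputs), weights))


# Hypothetical toy numbers, not taken from any real model.
weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [0, 1, 0]]
inputs = [1, 0, -1]
print(layer_outputs(weights, inputs))  # [-2, -2, -2, 0]
```

A real LLM repeats this pattern with billions of weights, which is why the number of simple operations a chip can run side by side matters more than how fast it finishes any single one.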

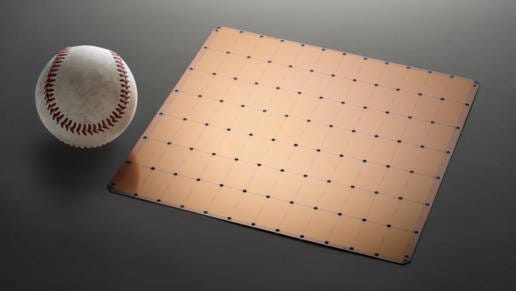

To do this, Cerebras created a “wafer chip,” which is a bunch of chips all physically connected into a big wafer about the size of a dinner plate. Here’s what it looks like:

Making these chips work is super hard. Per a 2019 NY Times story that covered the launch of the wafer chip:

> A.I. systems operate with many chips working together. The trouble is that moving big chunks of data between chips can be slow, and can limit how quickly chips analyze that information…

> Working with one big chip is very hard to do. Computer chips are typically built onto round silicon wafers that are about 12 inches in diameter. Each wafer usually contains about 100 chips.

> Many of these chips, when removed from the wafer, are thrown out and never used. Etching circuits into the silicon is such a complex process, manufacturers cannot eliminate defects. Some circuits just don’t work. This is part of the reason that chip makers keep their chips small — less room for error, so they don’t have to throw as many of them away.

> Cerebras said it had built a chip the size of an entire wafer.
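
The Times’ point about defects can be put in numbers with a simple textbook yield model (the Poisson model), which assumes defects land randomly across the wafer. Under that assumption, the chance that a chip of area A comes out defect-free is exp(-D * A), where D is the defect density. The defect density and chip areas below are illustrative assumptions, not figures from the article or from Cerebras.

```python
import math


def poisson_yield(area_cm2, defects_per_cm2):
    """Probability that a chip of the given area has zero defects,
    assuming defects are scattered at random (Poisson yield model)."""
    return math.exp(-defects_per_cm2 * area_cm2)


# Assumed defect density, for illustration only.
DEFECT_DENSITY = 0.1  # defects per square centimeter

# Hypothetical chip areas in square centimeters; the last one is roughly
# the size of a square cut from a 12-inch (about 30 cm) wafer.
chips = [("small chip", 1.0), ("large chip", 8.0), ("wafer-sized chip", 460.0)]

for label, area in chips:
    share = poisson_yield(area, DEFECT_DENSITY)
    print(f"{label}: {share:.1%} chance of being defect-free")
```

Even with a modest assumed defect rate, the odds of a perfect wafer-sized chip round to zero, so a chip that big only works if it’s designed to tolerate some faulty circuits rather than be thrown away.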

In 2022, Cerebras announced it had set a record for the largest Natural Language Processing (NLP) model ever trained on a single device: 20 billion parameters.

This matters for two reasons.

First: AI tools (like ChatGPT) work because they’ve learned the meaning of words in context. You don’t just upload a dictionary; you upload whole articles, books, and libraries. A model with more parameters (the internal values it tunes as it learns) can capture more of those patterns, and a model that can take in more context at once can better pin down what any given word means.

For example:

> Imagine hearing the expression “To be or not to be” without context, just using a dictionary. And then imagine understanding it within the context of Act III, Scene 1 of Hamlet. And then imagine if you had broader context and could understand it within the context of the entire play – or better yet, within the context of all Shakespearian literature. As the context within which understanding occurs is broadened, so too is the precision of the understanding. By vastly enlarging the context (the sequence of words within which the target word is understood), Cerebras enables NLP models to demonstrate a more sophisticated understanding of language. Bigger and more sophisticated context improves the accuracy of understanding in AI.
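
Here is a toy Python sketch of what “enlarging the context” means, using the Hamlet line. It treats the context as the words within some radius of a target word; that’s a simplification for illustration (real models work on tokens and look at the whole window at once), and the function name is hypothetical.

```python
def context_window(words, target_index, radius):
    """Return the words within `radius` positions on either side of the
    target word, clipped to the start and end of the text."""
    start = max(0, target_index - radius)
    end = min(len(words), target_index + radius + 1)
    return words[start:end]


line = "to be or not to be that is the question whether tis nobler in the mind to suffer"
words = line.split()
target = words.index("question")

# The same word, seen with progressively more surrounding text.
for radius in (0, 2, 5):
    print(radius, " ".join(context_window(words, target, radius)))
```

With a radius of 0 the model sees only “question”; widen the window and the word picks up the surrounding phrase, then most of the line.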

But so what? Why does it matter that Cerebras’ tech can handle billions of parameters all at once? Can’t you just upload the parts of the library one by one?

Well, you could, but it would take longer, since (as the Times noted) moving big chunks of data between chips is slow. This is the second reason Cerebras’ chips matter: they can do it faster than other systems.

How much faster?

The AI infrastructure business model and why it matters

In November 2022, Cerebras partnered with a cloud computing service provider called Cirrascale and announced a “pay-per-model” service.

This put a price on creating a ChatGPT-like model:

> Prices, ranging from $2,500 dollars to train a 1.3-billion-parameter model of GPT-3 in 10 hours to $2.5 million to train the 70-billion-parameter version in 85 days, are on average half the cost that users would pay to rent cloud capacity or lease machines for years to do the equivalent work. And the CS2 clusters can be eight times as fast to train as clusters of Nvidia A100 machines in the cloud.

Half the cost, and up to eight times as fast.

That’s a pretty big leap forward.
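
It’s worth running the quoted prices through some quick arithmetic. The figures below come straight from the quote; the comparison itself is only a back-of-the-envelope sketch.

```python
# Pay-per-model figures quoted above (Cerebras and Cirrascale, November 2022).
small = {"params_billion": 1.3, "price_usd": 2_500, "hours": 10}
large = {"params_billion": 70, "price_usd": 2_500_000, "hours": 85 * 24}


def growth(key):
    """How many times larger the 70B job is than the 1.3B job on one measure."""
    return large[key] / small[key]


param_growth = growth("params_billion")  # about 54x more parameters
price_growth = growth("price_usd")       # 1,000x the price
time_growth = growth("hours")            # 204x the training time

print(f"parameters: {param_growth:.0f}x, price: {price_growth:,.0f}x, time: {time_growth:.0f}x")

for name, job in (("1.3B model", small), ("70B model", large)):
    per_billion = job["price_usd"] / job["params_billion"]
    print(f"{name}: about ${per_billion:,.0f} per billion parameters")
```

A model about 54 times bigger costs 1,000 times as much and takes about 200 times as long to train, so cost grows much faster than model size. That’s why halving the price, or cutting the time by up to eight times, adds up quickly at the high end.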

So now we know that you can rent processing power that is custom-built to train LLMs and other NLP models, and essentially build whatever kind of AI you want.

Enter Mayo Clinic.

Why The Mayo Clinic is building AI models

According to the announcement, the Mayo Clinic plans to “tap into decades of anonymized medical records and data” to do two things: summarize medical records and read imaging.

This isn’t surprising. Both of these tasks are well suited to LLMs: summarizing a lengthy, dense piece of text was among ChatGPT’s first and most obvious use cases.

Meanwhile, we’ve been hearing about AI’s capacity to analyze and interpret radiology imaging for years.[2] The idea is to “scour images for patterns that the human eyes of trained medical experts may not detect.”

The Mayo Clinic won’t just use these models for its own patients and research, however. The ultimate plan is to charge smaller systems and hospitals for access. A community hospital without the resources (or the incentive) to develop a huge AI model for radiology could pay a fee to send its images to the Mayo Clinic and get the AI analysis back.

Use cases: life sciences vs. clinical care

So what’s to stop a medium-sized health system or a large physician group from buying some processing time on Cerebras’ systems and training its own LLM? Well, nothing. The question is whether it’s worth it.

Physician groups sell to hospitals, and hospitals need a financial justification for everything. Being able to read radiology images slightly faster doesn’t cut it, at least not yet. Unless the hospital can actually hire one less radiologist or bill for the faster analysis, it’s likely to just keep the system it has.

Right now, we have a lot of hospitals and physician groups interested in helping doctors avoid paperwork, and that’s great. I hope the LLMs can get good enough to listen and write notes and help these companies bill more efficiently. At the same time, I am looking forward to seeing more applications for AI in clinical care beyond “read and summarize documents.”

(As noted last week, I’m interested in seeing generative AI do patient intake.)

Meanwhile, the biomedical research applications are huge:

LLMs are great at recognizing patterns in huge amounts of data—for example, patterns in DNA sequences. Researchers can use these models to better understand genetic variations and mutations.

Researchers will also be able to analyze more genomic and clinical data, faster, and uncover patterns and relationships between different types (or modes) of data.

They will also be able to do more predictive modeling, including understanding diseases like cancer, which involve genetic mutation. It seems we have just started to scratch the surface of applications for personalized medicine in complex situations.

And of course, drug makers are salivating at the opportunity to discover new relationships between chemical structures and biological outcomes. It’s likely we’ll see an explosion in AI-assisted pharmaceutical research.

Many of these uses could have huge financial payoffs.

In contrast, making the doctor’s note more efficient feels like nibbling around the margins. It could make doctors’ lives much better, but I don’t see how it creates huge amounts of new value in care delivery.

That’s why it makes sense that a huge research institute like Mayo has the incentive to build its own cutting-edge AI model, while a group focused solely on care delivery seems more likely to buy purpose-built tools from partners than to develop its own.

[1] For my general understanding of this topic, I am grateful to my stepfather, a technologist at a world-leading data storage company who has been immersed in the fine details of engineering complex computer systems for four decades.

[2] It might seem odd to use a “language” processing model to analyze images, but the concept is the same: train a system on lots of data, in context, and then teach it to analyze new data. The meaning of pixels in the context of images is not substantively different from the meaning of words in the context of paragraphs, articles, and books.
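
One common way to treat an image like a sentence, used by vision transformers, is to cut it into small square patches and feed them to the model in order, the way a language model reads words. This is a swapped-in illustration; the announcement doesn’t say what architecture Mayo’s imaging models use, and the toy 4x4 image and function name below are hypothetical.

```python
def to_patches(image, patch_size):
    """Split a 2D grid of pixel values into square patches, read
    left-to-right and top-to-bottom, turning the image into a sequence.
    Assumes both image dimensions are multiples of patch_size."""
    rows, cols = len(image), len(image[0])
    patches = []
    for top in range(0, rows, patch_size):
        for left in range(0, cols, patch_size):
            patch = [
                pixel
                for row in image[top:top + patch_size]
                for pixel in row[left:left + patch_size]
            ]
            patches.append(patch)
    return patches


# A toy 4x4 "image" whose pixel values are just 0 through 15.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
for patch in to_patches(image, 2):
    print(patch)
```

Each patch then plays the role a word plays in a sentence: the model learns what it means from the patches around it.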