👨🏻‍⚕️ A reasonable imitation of a doctor

AI, healthcare disruption, and the most interesting conversation I had in 2023

Hey there! After a 2-year hiatus (I know), I'm back.

A few things have changed since you last heard from me. Most importantly, I am refocusing this newsletter on what interests me most in healthcare right now: the AI disruption.

I am attempting to answer a few questions:

Will AI actually disrupt healthcare? If so, where?

Which jobs can AI do better than humans (including patient care), and who, as a result, will lose their jobs?

And finally, what is AI-related hype vs. what is true disruption that could help certain companies and business models?

If you like this or think it’s important, I hope you’ll share.

I. “Pretend you’re an emergency physician”

The most interesting conversation I had last year was with an emergency medicine physician.

ChatGPT was just a few months old, and I was an early adopter, using it in my marketing work. It was clearly a terrible writer, but in other areas it astonished me with how useful it could be.

I shared my screen, opened the familiar ChatGPT welcome page with its prompt box, and told it to pretend it was an emergency physician taking my history: “My chief complaint is chest pain. What do you ask me?”

And then it started responding:

Hello, I’m Dr. ChatGPT, an emergency physician. I’m sorry to hear you’re experiencing chest pain. Can you please tell me more about your symptoms?

When did your chest pain start?

Can you describe the pain? Is it sharp, dull, crushing, burning, or squeezing?

Is the pain localized or does it radiate to any other part of your body such as your arms, jaw, or back?

How severe is the pain on a scale of 0-10, with 0 being no pain and 10 being the worst pain imaginable?

Have you had chest pain before, and if so, how does this episode compare to previous episodes?

Are you experiencing any shortness of breath, sweating, nausea or vomiting, palpitations, or dizziness?

Have you had any recent surgeries or medical procedures, or been diagnosed with any medical conditions?

Are you taking any medications or supplements? If so, which ones?

Have you ever had a heart attack or been told you have heart disease?

Do you have a family history of heart disease or any other medical conditions that could be related to your symptoms?

That list of questions alone got the doctor’s attention. He said they were essentially the same questions he would ask.

I asked how a fairly typical patient might respond, one he might encounter on an average day in the ER. I typed his suggested responses into ChatGPT: the pain started two days ago. It comes and goes and lasts for about five minutes each time. It feels more crushing, like something heavy on my chest. I do have a history of coronary artery disease, and I used to smoke cigarettes.

Even a layperson would probably recognize a possible heart attack. But here’s how ChatGPT responded:

Thank you for sharing that information with me. Given your history of coronary artery disease and your current symptoms, it’s important that we take this seriously and act quickly.

First, I would like to perform an electrocardiogram (ECG) to assess the electrical activity of your heart and see if there are any changes suggestive of a heart attack. I would also like to order blood tests to check for cardiac biomarkers that may indicate damage to the heart muscle.

In addition to these tests, I would like to know more about your current medications and whether you have taken any medications recently that could be contributing to your symptoms. We may need to adjust your medications or provide additional treatments to manage your chest pain and prevent any further complications.

Given your history of smoking, it’s also important to assess your lung function and rule out any potential respiratory causes of your chest pain.

At this point, I would recommend that we admit you to the hospital for further evaluation and treatment. We will continue to monitor your condition and take any necessary steps to ensure that you receive the best possible care.

The real-life emergency doctor had to admit: it’s almost exactly what he would have said and done.

II. How accurate is ChatGPT in clinical diagnosis?

Research published since ChatGPT’s release suggests it is fairly accurate at medical diagnosis, though not yet on par with human doctors in every respect.

A 2023 study by Mass General Brigham researchers found that ChatGPT was about 72% accurate overall in clinical decision-making, across everything from the initial diagnosis to final conclusions and management decisions. On the final diagnosis alone, it was 77% accurate.

Another study, published in April 2023, tested ChatGPT’s diagnostic accuracy on 50 clinical case vignettes: 40 common presentations and 10 rare ones. The researchers found that GPT-4 solved every common case within its first two suggested diagnoses, but needed eight or more suggestions to solve 90% of the rare cases.

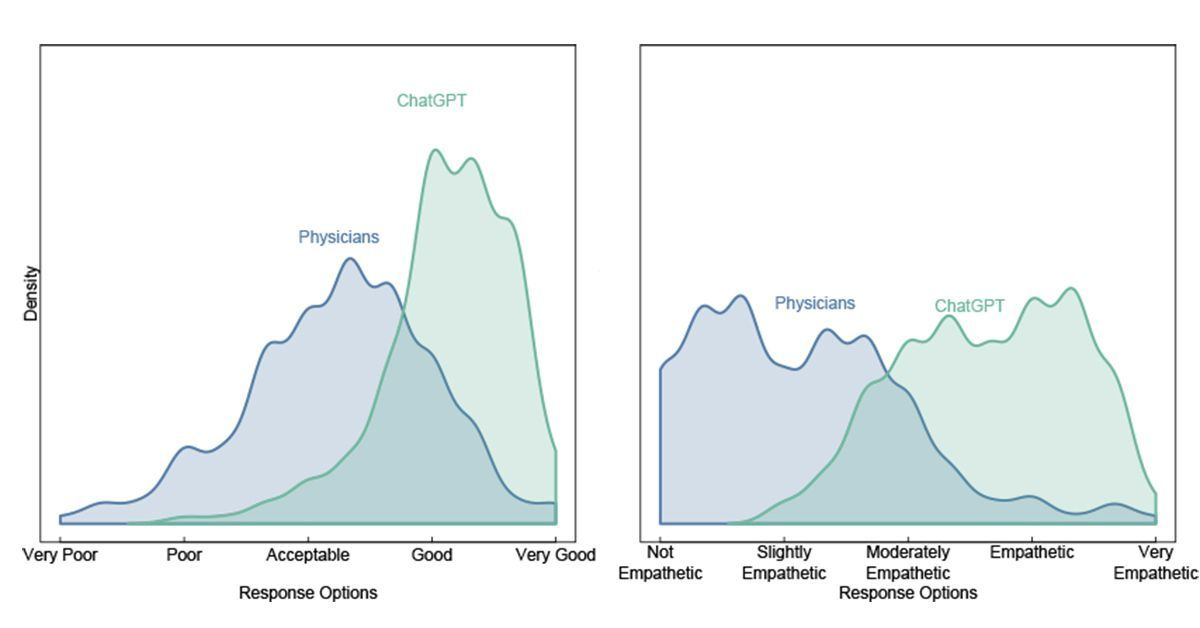

Another April 2023 study, published in JAMA Internal Medicine, compared ChatGPT’s answers to real-world patient questions with answers from actual physicians. Evaluators, blinded to the source, rated each answer for quality and empathy, and ChatGPT outperformed the physicians on both measures.

On the other hand, ChatGPT still hallucinates, especially in complex situations.

Researchers at Brigham and Women's Hospital in Boston recently found that cancer treatment plans generated by ChatGPT were full of errors (then again, don’t oncologists often disagree about the best treatment plan?). Their study was published in JAMA Oncology in August.

III. ChatGPT and clinical efficiency

Emergency physicians see a median of about 2.1 patients per hour, though the number varies with acuity, ED flow, and staffing limitations.

Meanwhile, I once heard the CEO of a national group suggest that, in an ideal world, an ER doc should be able to see 10 patients per hour.

Yes, there were audible gasps in the room when he said it.

But just imagine you are an ER physician.

Patients come into the emergency department. A nurse in triage takes their details, logs a chief complaint, and shows them directly to a room.

Next, a friendly ChatBot on a screen starts asking the patient questions. It speaks in a soothing, empathetic, and precise voice. It never gets tired and works 24 hours a day, seven days a week. It is routinely audited for errors, and those errors are fed back into its training so it improves over time.

Patients answer in their own words, and the ChatBot asks follow-up questions as needed. Then, depending on how certain it is, it sorts each patient: definitely discharge, definitely admit, real-life doctor needed, need tests ordered… you get the idea.
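Under the hood, that sorting step amounts to confidence-threshold routing. Here is a minimal Python sketch, assuming the ChatBot produces a suggested disposition plus a confidence score between 0 and 1. The four categories come from the list above; the 0.9 threshold, the class names, and the sample patients are hypothetical illustrations, not any vendor’s actual system. Anything the ChatBot is unsure about goes to a real-life doctor.

```python
from dataclasses import dataclass
from enum import Enum


class Disposition(Enum):
    DISCHARGE = "definitely discharge"
    ADMIT = "definitely admit"
    ORDER_TESTS = "need tests ordered"
    PHYSICIAN = "real-life doctor needed"


@dataclass
class Assessment:
    # Hypothetical ChatBot output: its suggested disposition and
    # how confident it is in that suggestion (0.0 to 1.0).
    suggested: Disposition
    confidence: float


# Hypothetical hospital-set threshold; below it, a human decides.
CONFIDENCE_THRESHOLD = 0.9


def route(assessment: Assessment, threshold: float = CONFIDENCE_THRESHOLD) -> Disposition:
    """Accept the ChatBot's suggestion only when it is confident enough;
    otherwise flag the patient for a physician."""
    if not 0.0 <= assessment.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if assessment.confidence >= threshold:
        return assessment.suggested
    return Disposition.PHYSICIAN


if __name__ == "__main__":
    patients = {
        "ankle sprain": Assessment(Disposition.DISCHARGE, 0.97),
        "crushing chest pain": Assessment(Disposition.ADMIT, 0.95),
        "vague abdominal pain": Assessment(Disposition.ORDER_TESTS, 0.62),
    }
    for complaint, assessment in patients.items():
        print(f"{complaint}: {route(assessment).value}")
```

In practice, each hospital would set its own thresholds, and likely different ones per disposition, since a mistaken discharge costs far more than an unnecessary test.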

Hospitals will differ in their processes and in their willingness to embrace it, but the capability is coming.

From a central location, the physician can monitor patient flow, order tests, and coordinate care. They need to see in person only the patients the ChatBot flags as requiring additional intervention, based on protocols and criteria set by the hospital. The AI writes the note, extracts the billing codes, and submits the paperwork.

With all the technology working seamlessly, why couldn’t a physician see ten patients per hour?

If there are barriers to this future, they likely have little to do with the tech itself, and everything to do with incentives, payments, interest groups, and bureaucracy. Will doctors feel threatened? Yes, definitely. Will groups be able to bill for ChatBot services? Unknown. Will patients and/or regulators recoil at the thought of an AI stepping into patient care? Time will tell.

But it won’t be the tech holding U.S. healthcare back from greater efficiency.

IV. Billing & Coding

At the end of the day, it’s hard to escape the fact that hospitals, health systems, and physician groups are often laser-focused on how to get better at billing and coding.

Politico published a nice piece a few days ago (AI’s big test: Making sense of $4 trillion in medical expenses) on applying AI to billing and coding, or processing “the $4 trillion in medical expenses Americans accrue each year.”

And, as usual, there’s an arms race against the insurers:

For providers, the dream is an AI tool that can quickly and aggressively code procedures and file claims. Insurers — and the government agencies that pay for health care — want comparable technology to scrub those bills.

“Everyone’s trying to maximize revenue while toeing the line on, effectively, fraud,” said Nick Stepro, chief product and technology officer at Arcadia, a company that works with health care organizations on both sides of the divide looking to build the technology.

Not surprisingly, both UnitedHealth and Humana are already being sued over their use of AI to restrict care.

V. Predictions & Recollections

Chief Healthcare Executive has a roundup of predictions about AI’s role in the coming year. And Maxime Vermeir of the automation company ABBYY flags three pitfalls for healthcare groups adopting AI solutions this year.

Meanwhile, Becker’s HealthIT has a roundup of how the largest health systems used AI in 2023. The most interesting is HCA’s partnership with Google, which uses generative AI to speed nurse handoffs and to listen to patient interactions and draft the physician’s note.

Per Becker’s: “In the four HCA ERs, physicians have hands-free devices outfitted with the Augmedix medical transcription app that record their interactions with patients. The data is then fed through Google's PaLM large language model, instantly creating a medical note that the providers review before it's uploaded to the EHR. HCA plans to scale the program to other departments and hospitals.”

Until next time,

Russell Max Simon | LinkedIn

Founder & Chief Corporate Storyteller, HealthPolity